Dominic DiFranzo

Culturally-Grounded Governance for Multilingual Language Models: Rights, Data Boundaries, and Accountable AI Design

Jan 31, 2026

Abstract:Multilingual large language models (MLLMs) are increasingly deployed across cultural, linguistic, and political contexts, yet existing governance frameworks largely assume English-centric data, homogeneous user populations, and abstract notions of fairness. This creates systematic risks for low-resource languages and culturally marginalized communities, where data practices, model behavior, and accountability mechanisms often fail to align with local norms, rights, and expectations. Drawing on cross-cultural perspectives in human-centered computing and AI governance, this paper synthesizes existing evidence on multilingual model behavior, data asymmetries, and sociotechnical harm, and articulates a culturally grounded governance framework for MLLMs. We identify three interrelated governance challenges: cultural and linguistic inequities in training data and evaluation practices; misalignment between global deployment and locally situated norms, values, and power structures; and limited accountability mechanisms for addressing harms experienced by marginalized language communities. Rather than proposing new technical benchmarks, we contribute a conceptual agenda that reframes multilingual AI governance as a sociocultural and rights-based problem. We outline design and policy implications for data stewardship, transparency, and participatory accountability, and argue that culturally grounded governance is essential for ensuring that multilingual language models do not reproduce existing global inequalities under the guise of scale and neutrality.

Artificial intelligence in communication impacts language and social relationships

Feb 10, 2021

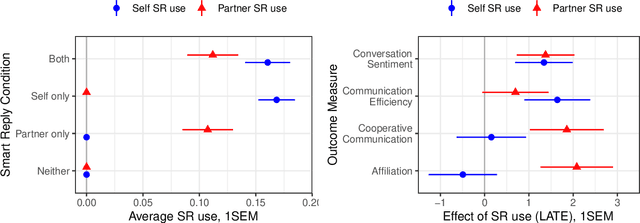

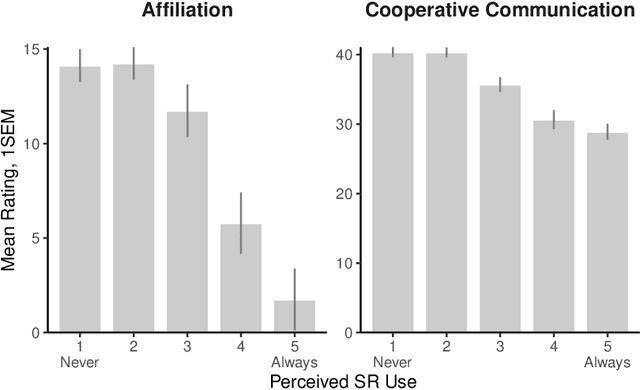

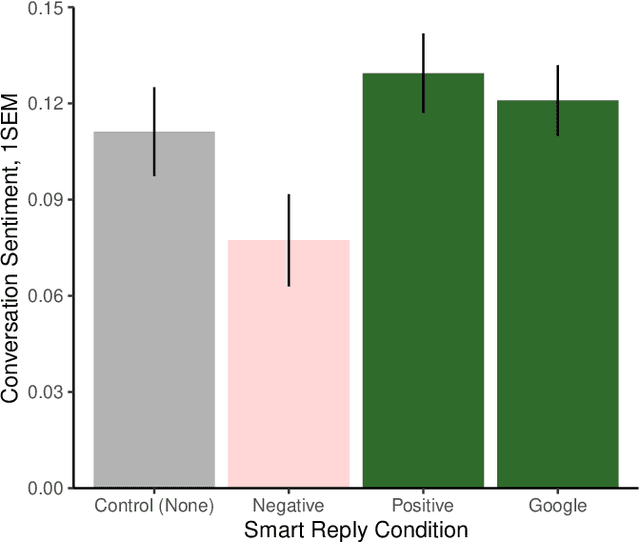

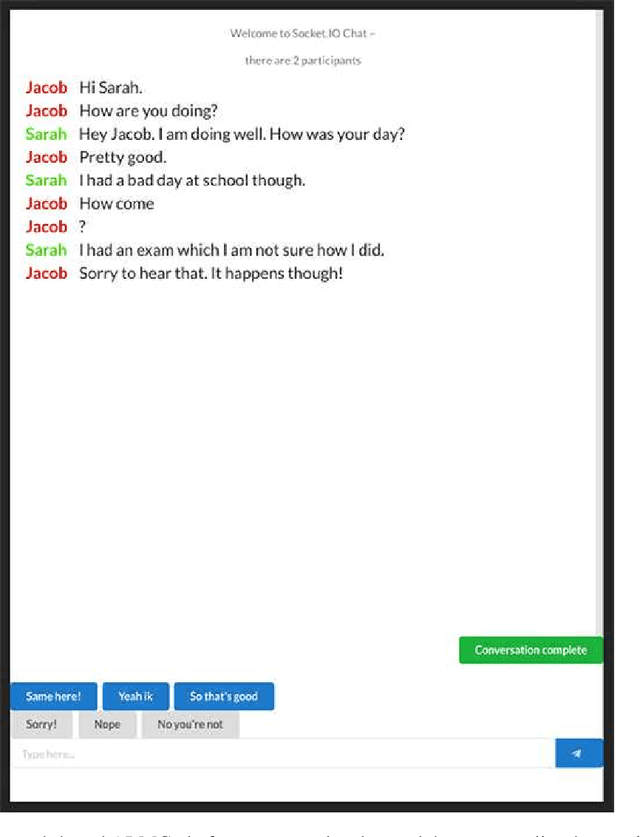

Abstract:Artificial intelligence (AI) is now widely used to facilitate social interaction, but its impact on social relationships and communication is not well understood. We study the social consequences of one of the most pervasive AI applications: algorithmic response suggestions ("smart replies"). Two randomized experiments (n = 1036) provide evidence that a commercially-deployed AI changes how people interact with and perceive one another in pro-social and anti-social ways. We find that using algorithmic responses increases communication efficiency, use of positive emotional language, and positive evaluations by communication partners. However, consistent with common assumptions about the negative implications of AI, people are evaluated more negatively if they are suspected to be using algorithmic responses. Thus, even though AI can increase communication efficiency and improve interpersonal perceptions, it risks changing users' language production and continues to be viewed negatively.

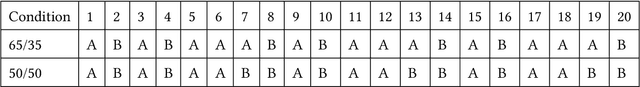

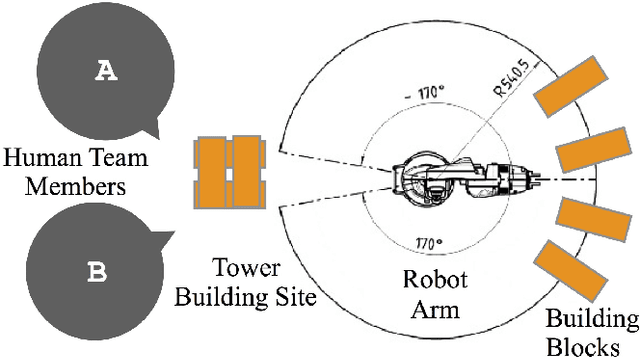

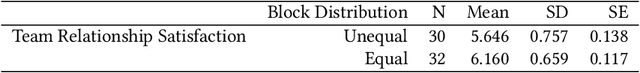

Robot Assisted Tower Construction - A Resource Distribution Task to Study Human-Robot Collaboration and Interaction with Groups of People

Dec 22, 2018

Abstract:Research on human-robot collaboration, or human-robot teaming, has focused predominantly on understanding and enabling collaboration between a single robot and a single human. Extending human-robot collaboration research beyond the dyad raises novel questions about how a robot should distribute resources among group members and about the social and task-related consequences of that distribution. Methodological advances are needed to allow researchers to collect data about human-robot collaboration that involves multiple people. This paper presents Tower Construction, a novel resource distribution task that allows researchers to examine collaboration between a robot and groups of people. By focusing on the question of whether and how a robot's distribution of resources (wooden blocks required for a building task) affects collaboration dynamics and outcomes, we provide a case of how this task can be applied in a laboratory study with 124 participants to collect data about human-robot collaboration that involves multiple humans. We highlight the kinds of insights the task can yield. In particular, we find that the distribution of resources affects perceptions of performance and interpersonal dynamics between human team members.
